How to Deploy Stable Diffusion on Dedicated GPU Servers for Maximum Performance

Deploying Stable Diffusion on Dedicated GPU Servers means installing and running the Stable Diffusion AI Image Generator on a high-performance computer with a strong GPU so it can create images faster and more reliably.

As AI workloads grow, picking the right hosting environment matters more than ever. With strong NVIDIA GPUs, fast NVMe storage, and flexible setup choices, PerLod Hosting gives you a solid base for running Stable Diffusion in production.

In this guide, we deploy Stable Diffusion on a Linux GPU server using the popular AUTOMATIC1111 Web UI.

Prerequisites to Deploy Stable Diffusion on Dedicated GPU Servers

With this setup guide, you will have a fully working GPU server that automatically runs Stable Diffusion WebUI with drivers, models, optimizations, and example setups ready to use.

Before you start the setup, you need a GPU Dedicated Server and basic software setup, including:

Hardware:

- 12 to 16 GB VRAM (like RTX 3060 12 GB or T4 16 GB): Good for smaller images.

- 24 GB VRAM (like RTX 4090/A5000): Good for bigger images and higher batches.

- 40 GB+ VRAM (like A100/A6000/L40/L40S): Best for large models, training, or multiple users.

Software:

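- Ubuntu 22.04 or 24.04. The WebUI targets Python 3.10, which is the default on 22.04; Ubuntu 24.04 ships Python 3.12, so you may need to install Python 3.10 separately there.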

- SSH access with sudo.

- NVIDIA drivers installed.

To check the drivers, run the command below:

nvidia-smi

The output should list your GPU and the driver version; if it does not, fix the driver installation first.
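For scripted provisioning, the same check can be automated. The sketch below assumes the CSV query output of nvidia-smi (GPU name, then total memory in MiB). The function name gpu_meets_minimum and the 12000 MiB floor, which is loosely based on the smallest tier in the hardware list, are illustrative choices and not part of any official tool.

```shell
# Hypothetical helper: decide whether one line of
#   nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits
# (for example "NVIDIA GeForce RTX 3060, 12288") meets a VRAM floor in MiB.
# Returns 0 if the GPU is large enough, 1 if it is too small,
# and 2 if no number could be parsed from the line.
gpu_meets_minimum() {
    gmm_line=$1
    gmm_min_mib=${2:-12000}   # assumed floor, roughly the 12 GB tier
    # VRAM is the last comma-separated field; strip the padding spaces.
    gmm_vram=$(printf '%s\n' "$gmm_line" | awk -F',' '{gsub(/ /, "", $NF); print $NF}')
    case $gmm_vram in
        ''|*[!0-9]*) return 2 ;;
    esac
    [ "$gmm_vram" -ge "$gmm_min_mib" ]
}

# Example on a GPU server (checks the first GPU only):
#   line=$(nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits | head -n 1)
#   gpu_meets_minimum "$line" && echo "GPU looks large enough"
```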

Once you have met these requirements, proceed to the following steps to start deploying Stable Diffusion on dedicated GPU servers.

Step 1. Prepare GPU Server For Stable Diffusion AI Image Generator

You must prepare the GPU-dedicated server by updating its software and installing the required tools.

Run the system update and upgrade with the commands below:

sudo apt update

sudo apt upgrade -y

Install required packages, build tools, and Python toolchain with the command below:

sudo apt install git python3 python3-venv python3-pip \
wget ffmpeg libgl1 libglib2.0-0 -y

Verify Python installation by checking its version:

python3 --version

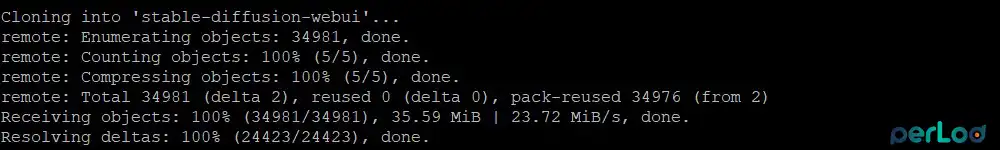

pip3 --version

Step 2. Download Stable Diffusion WebUI Repository

At this point, you must download the Stable Diffusion WebUI from GitHub, which provides the browser-based interface.

Navigate to the /opt directory and use the Git command to clone the Stable Diffusion WebUI repository:

cd /opt

sudo git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

Set the correct ownership for the Stable Diffusion directory with the command below:

sudo chown -R "$USER":"$USER" stable-diffusion-webui

Then, navigate to the Stable Diffusion directory with the command below:

cd stable-diffusion-webui

Your working directory is:

/opt/stable-diffusion-webui

Step 3. Add Stable Diffusion Model File

Stable Diffusion requires a model file to generate images. You can think of it as the brain that has learned how to create images.

These model files belong in the Stable-diffusion folder:

/opt/stable-diffusion-webui/models/Stable-diffusion/

You have two options for adding models:

Option A: Upload from your local machine

From your local machine, run the command below with your real values. No sudo is needed on the local side; the server user only needs write access to the target directory:

scp path/to/your_model.safetensors user@YOUR_SERVER_IP:/opt/stable-diffusion-webui/models/Stable-diffusion/

Option B: Download directly from a URL

If you have a direct link, navigate to the models directory and download the file. Because the directory belongs to your user, sudo is not required:

cd /opt/stable-diffusion-webui/models/Stable-diffusion/

wget "https://example.com/path/to/your_model.safetensors"

Note: Some models on Hugging Face need your personal access token and approval before you can download them, so use those links with your own login details when required.
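For token-protected downloads, one common approach is to send the token as a Bearer header. The sketch below assumes the token is stored in an environment variable named HF_TOKEN; that name and the two function names are conventions of this example only, and the URL is the same placeholder used above.

```shell
# Hypothetical helper: print the Authorization header for a Hugging Face
# access token, or nothing when HF_TOKEN is unset or empty.
hf_auth_header() {
    if [ -n "${HF_TOKEN:-}" ]; then
        printf 'Authorization: Bearer %s\n' "$HF_TOKEN"
    fi
}

# Hypothetical helper: download URL into the models directory, adding the
# header only when a token is available.
download_model() {
    dm_url=$1
    dm_dir=${2:-/opt/stable-diffusion-webui/models/Stable-diffusion}
    dm_header=$(hf_auth_header)
    if [ -n "$dm_header" ]; then
        wget --header="$dm_header" -P "$dm_dir" "$dm_url"
    else
        wget -P "$dm_dir" "$dm_url"
    fi
}

# Example (placeholder URL and token):
#   export HF_TOKEN=hf_xxx
#   download_model "https://example.com/path/to/your_model.safetensors"
```

Keeping the token in an environment variable avoids pasting it into the URL, where it could end up in shell history or logs.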

Once you are done, verify the file with the command below:

ls -lh /opt/stable-diffusion-webui/models/Stable-diffusion/

In your output, you must see at least one .safetensors or .ckpt file.
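If you script the setup, this check can be expressed as a small function. The sketch below is illustrative: has_model_file is a made-up name, and treating zero-byte files as missing is an extra assumption meant to catch interrupted downloads.

```shell
# Hypothetical helper: succeed if DIR holds at least one non-empty
# .safetensors or .ckpt file.
has_model_file() {
    hmf_dir=${1:-/opt/stable-diffusion-webui/models/Stable-diffusion}
    for hmf_f in "$hmf_dir"/*.safetensors "$hmf_dir"/*.ckpt; do
        # An unmatched glob stays literal, so -s also filters that case out.
        if [ -s "$hmf_f" ]; then
            return 0
        fi
    done
    return 1
}

# Example:
#   has_model_file || echo "No model found, add one before starting the WebUI"
```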

Step 4. First Manual Stable Diffusion Run

To verify everything is set up correctly, you need to run the program manually for the first time.

Note: The first time you run this, it will take several minutes to finish. This is normal as it downloads and sets up the rest of the required software.

From the Stable Diffusion directory, run the webui.sh script with the basic GPU optimisations:

cd /opt/stable-diffusion-webui

./webui.sh --listen --port 7860 --xformers

Run the script as your regular user rather than with sudo, because webui.sh refuses to start as root by default.

Flags used in the command:

- --listen: Bind to 0.0.0.0 so you can reach it from your browser.

- --port 7860: Default port.

- --xformers: Enables memory-efficient attention for better performance.

When you see something similar to this:

Running on local URL: http://0.0.0.0:7860

From your browser, you can open:

http://YOUR_SERVER_IP:7860

If it works and you can generate images, you know the baseline is OK.
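Because the first start can take several minutes, it can help to poll the port from a second SSH session instead of refreshing the browser. The following is a minimal sketch that assumes curl is installed; the function name and the default attempt count and delay are guesses made for this example, not WebUI settings.

```shell
# Hypothetical helper: poll URL until it answers or the attempts run out.
# Arguments: URL, attempts (default 60), seconds between attempts (default 5).
wait_for_webui() {
    wfw_url=$1
    wfw_tries=${2:-60}
    wfw_delay=${3:-5}
    while [ "$wfw_tries" -gt 0 ]; do
        # -f: treat HTTP error codes as failure; -s: no progress output.
        if curl -fs --max-time 5 -o /dev/null "$wfw_url"; then
            return 0
        fi
        wfw_tries=$((wfw_tries - 1))
        sleep "$wfw_delay"
    done
    return 1
}

# Example, run on the server itself:
#   wait_for_webui "http://127.0.0.1:7860" && echo "WebUI is up"
```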

Optimize GPU Memory for Better Performance in Stable Diffusion

Stable Diffusion can consume a lot of VRAM. To make it run faster and use less of your memory, you can save some special settings in a file, which means you won’t have to type them out every time you start the program.

Create or edit webui-user.sh in the project directory with the commands below:

cd /opt/stable-diffusion-webui

sudo nano webui-user.sh

Add the following settings to the file:

#!/usr/bin/env bash

# Common for servers
export COMMANDLINE_ARGS="\
--listen \
--port 7860 \
--xformers \
--enable-insecure-extension-access \
--opt-sdp-attention \
--opt-channelslast \
--api \
"

Once you are done, save and close the file. Make the file executable with the command below:

sudo chmod +x webui-user.sh

Then run the script as your regular user (webui.sh refuses to start as root) to use the default settings you have defined:

./webui.sh

Typical flags for GPU memory:

For 12 to 16 GB GPUs (3060, T4):

--xformers --medvram --opt-sdp-attention --opt-channelslast

For 24 GB GPUs (4090, A5000):

--xformers --opt-sdp-attention --opt-channelslast

For 40+ GB GPUs (A100, A6000, L40):

--xformers --opt-sdp-attention --opt-channelslast

Note: --medvram uses less GPU memory but runs slower. Turn it on if you get “CUDA out-of-memory” errors.
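The tiers above can be turned into a small lookup for provisioning scripts. This sketch takes total VRAM in MiB, as reported by nvidia-smi, and prints the matching flag set. The function name suggest_flags and the 20000 MiB cut-off between the smaller and larger tiers are assumptions of this example.

```shell
# Hypothetical helper: map total VRAM in MiB to the flag sets listed above.
suggest_flags() {
    sf_vram_mib=$1
    if [ "$sf_vram_mib" -lt 20000 ]; then
        # Smaller cards: --medvram trades speed for lower memory use.
        printf '%s\n' "--xformers --medvram --opt-sdp-attention --opt-channelslast"
    else
        printf '%s\n' "--xformers --opt-sdp-attention --opt-channelslast"
    fi
}

# Example on a GPU server (first GPU only):
#   vram=$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | head -n 1)
#   suggest_flags "$vram"
```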

Set up Stable Diffusion System Service

To make sure Stable Diffusion starts automatically when your server boots and runs reliably in the background, you can set it up as a system service.

Create a dedicated user account to run the service with the commands below:

sudo useradd -m -s /bin/bash sduser

sudo usermod -aG sudo sduser

sudo chown -R sduser:sduser /opt/stable-diffusion-webui

Verify it with the following command:

ls -ld /opt/stable-diffusion-webui

You should see sduser as the owner and group.

Create the Stable Diffusion systemd unit file with the command below:

sudo nano /etc/systemd/system/stable-diffusion.service

Add the following sample config to the file:

[Unit]
Description=Stable Diffusion WebUI (AUTOMATIC1111)
After=network.target

[Service]
Type=simple
User=sduser
Group=sduser
WorkingDirectory=/opt/stable-diffusion-webui
Environment="PYTHONUNBUFFERED=1"
# Optional: limit visible GPUs (e.g. use only GPU 0)
# Environment="CUDA_VISIBLE_DEVICES=0"
ExecStart=/bin/bash -lc "./webui.sh"
Restart=on-failure
RestartSec=10
# Resource limits (tune if needed)
# LimitNOFILE=65535

[Install]
WantedBy=multi-user.target

Save and close the file.

Reload the system daemon and start the Stable Diffusion service with the commands below:

sudo systemctl daemon-reload

sudo systemctl enable stable-diffusion

sudo systemctl start stable-diffusion

Check that the service is active and running with the following command:

sudo systemctl status stable-diffusion

Once it is running, you can access it from your browser:

http://YOUR_SERVER_IP:7860

Use Nginx Reverse Proxy For Stable Diffusion (Optional Security)

At this point, your Stable Diffusion interface is accessible by anyone who knows your server’s IP address and port. To reduce that exposure, you can bind the WebUI to localhost and put Nginx in front of it as a reverse proxy, which gives you a single entry point where TLS and access controls can be added.

First, update the default flags so the WebUI binds only to localhost by editing the webui-user.sh file:

cd /opt/stable-diffusion-webui

sudo nano webui-user.sh

Update the settings as shown below. The --listen flag is removed here: it is a plain switch that binds to 0.0.0.0 and does not accept an address, and without it the WebUI listens on 127.0.0.1 only:

export COMMANDLINE_ARGS="\
--port 7860 \
--xformers \
--opt-sdp-attention \
--opt-channelslast \
--api \
"

Once you are done, restart the service to apply the changes:

sudo systemctl restart stable-diffusion

Install Nginx with the command below:

sudo apt install nginx -y

Create a sample Nginx server block file with the command below:

sudo nano /etc/nginx/sites-available/stable-diffusion.conf

Add the following configuration with your domain name to the file:

server {
    listen 80;
    server_name your-domain.com;

    location / {
        proxy_pass http://127.0.0.1:7860/;
        proxy_http_version 1.1;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        # Forward WebSocket upgrade requests, which the Gradio-based UI may use
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }
}

Enable the file and check for any syntax errors with the commands below:

sudo ln -s /etc/nginx/sites-available/stable-diffusion.conf /etc/nginx/sites-enabled/

sudo nginx -t

If everything is OK, reload Nginx to apply the changes:

sudo systemctl reload nginx

Now you can access Stable Diffusion via:

http://your-domain.com

Tip: For more security, you can use Let’s Encrypt and Certbot to generate SSL certificates for your domain.
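Since the configuration above includes the --api flag, the WebUI also serves an HTTP API that can be reached through the same proxy. The sketch below builds a minimal request body for the /sdapi/v1/txt2img endpoint. The function name is made up for this example, only two of the endpoint's many fields are set, and the prompt is assumed to contain no double quotes or backslashes.

```shell
# Hypothetical helper: build a minimal JSON body for /sdapi/v1/txt2img.
# Arguments: prompt text, number of sampling steps (default 20).
txt2img_payload() {
    tp_prompt=$1
    tp_steps=${2:-20}
    printf '{"prompt": "%s", "steps": %s}\n' "$tp_prompt" "$tp_steps"
}

# Example call through the proxy. The response is JSON whose "images"
# field holds base64-encoded image data:
#   curl -s -X POST "http://your-domain.com/sdapi/v1/txt2img" \
#        -H "Content-Type: application/json" \
#        -d "$(txt2img_payload 'a lighthouse at dusk' 20)"
```

Note that the API is as exposed as the web interface itself, so the same access controls should cover both.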

Create a Backup and Update Stable Diffusion

It is important to regularly back up your work and update the software. In this step, you will learn how to create a backup of your important files and how to safely update to the latest version of Stable Diffusion when it becomes available.

Main files you need to back up include:

- /opt/stable-diffusion-webui/models/

- /opt/stable-diffusion-webui/embeddings/

- /opt/stable-diffusion-webui/extensions/

- Any custom configs or scripts you created.

You can archive the whole WebUI directory, which includes all of the above, with the command below:

cd /opt

sudo tar czf sd-webui-backup-$(date +%F).tar.gz stable-diffusion-webui

Copy this archive to a safe storage location.
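The full-directory archive also contains the Python virtual environment and the Git checkout, both of which can be recreated. If you prefer a smaller backup, a sketch like the following archives only the data directories listed earlier. The function name and the archive file name are choices made for this example.

```shell
# Hypothetical helper: archive only models, embeddings, and extensions.
# Arguments: WebUI root (default /opt/stable-diffusion-webui),
#            output directory (default: current directory).
# Prints the path of the archive it created.
backup_webui_data() {
    bwd_root=${1:-/opt/stable-diffusion-webui}
    bwd_out=${2:-.}
    bwd_archive="$bwd_out/sd-webui-data-$(date +%F).tar.gz"
    bwd_dirs=""
    for bwd_d in models embeddings extensions; do
        # Skip directories that do not exist on this install.
        if [ -d "$bwd_root/$bwd_d" ]; then
            bwd_dirs="$bwd_dirs $bwd_d"
        fi
    done
    if [ -z "$bwd_dirs" ]; then
        return 1
    fi
    # -C keeps archive paths relative to the WebUI root.
    # $bwd_dirs is unquoted on purpose so it splits into separate names.
    tar czf "$bwd_archive" -C "$bwd_root" $bwd_dirs || return 1
    printf '%s\n' "$bwd_archive"
}

# Example:
#   backup_webui_data /opt/stable-diffusion-webui "$HOME"
```

Because the paths inside the archive are relative, it can be restored onto a fresh checkout with tar xzf and the same -C option.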

To update Stable Diffusion, stop the service, run git pull to get the latest version, and start the service again. Because the directory now belongs to sduser, run Git as that user so it does not reject the repository over its ownership:

sudo systemctl stop stable-diffusion

cd /opt/stable-diffusion-webui

sudo -u sduser git pull

sudo systemctl start stable-diffusion

If dependencies change, the next start may take a bit longer while it updates them.

FAQs

What hardware do I need to run Stable Diffusion efficiently?

Stable Diffusion performs best on servers equipped with NVIDIA GPUs that support CUDA. A minimum of 8–12 GB VRAM is recommended for basic generation tasks, while professional workloads require 24–48 GB VRAM or more.

Which operating system is best for deploying Stable Diffusion?

Linux distributions like Ubuntu 22.04 and 24.04 are the most widely supported and provide smooth compatibility with NVIDIA drivers, CUDA, and machine learning frameworks.

How much storage do Stable Diffusion models require?

Model sizes vary from 2 GB to 7 GB+, depending on the architecture. Extensions, LoRAs, embeddings, and checkpoints can easily add tens of gigabytes more. Using fast NVMe storage is highly recommended.

Conclusion

Deploying Stable Diffusion on Dedicated GPU Servers is one of the most powerful ways to unlock the full potential of AI-driven image generation. With proper configuration, optimized performance settings, and a reliable hosting environment, you can achieve fast rendering speeds and stable operation for your projects and production workloads.

Remember that a powerful hosting provider makes all the difference. PerLod offers the best infrastructure for running Stable Diffusion smoothly.
